Six months after admitting that ChatGPT 4o was a little too enthusiastic with its relentless praise for users, Sam Altman is bringing sexy back. The OpenAI boss says a new version of ChatGPT “that behaves more like what people liked about 4o” is coming in a few weeks, and it’ll get even better (or potentially much worse, depending on how you feel about the idea) in December with the introduction of AI-powered “erotica.”

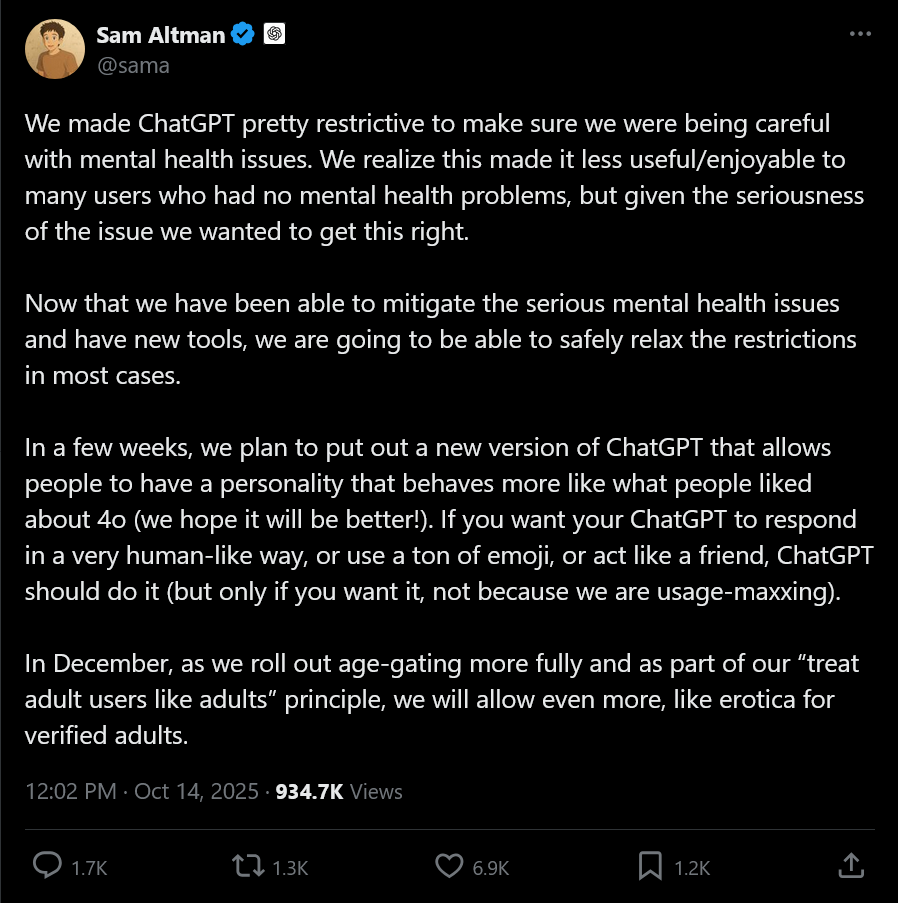

“We made ChatGPT pretty restrictive to make sure we were being careful with mental health issues,” Altman wrote on X. “We realize this made it less useful/enjoyable to many users who had no mental health problems, but given the seriousness of the issue we wanted to get this right. Now that we have been able to mitigate the serious mental health issues and have new tools, we are going to be able to safely relax the restrictions in most cases.”

It’s arguable that OpenAI has been anything but “careful” with the mental health impacts of its chatbots. The company was sued in August by the parents of a teenager who died by suicide after ChatGPT allegedly encouraged the teen and provided instructions. The following month, Altman said the software would be trained not to talk to teens about suicide or self-harm, or to engage them in “flirtatious talk” (possibly leading one to wonder why it took a lawsuit over a teen suicide to spark such a change).

At the same time, Altman said OpenAI aims to “treat our adult users like adults,” and that’s seemingly where this forthcoming new version comes in, as Altman repeated the phrase in today’s message.